Kubernetes 1.26 Deployment Plan

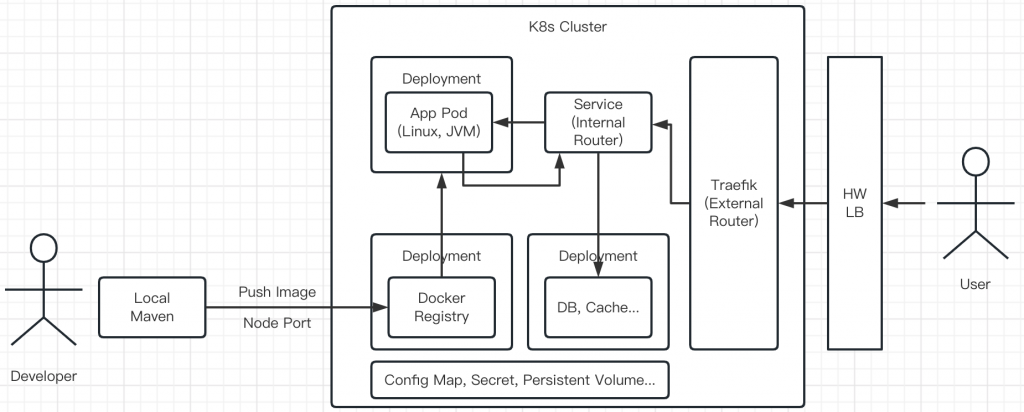

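Overview

- After setting up Kubernetes on the servers (VMs), install a private Docker image registry protected by user name and password. (Its port is mapped through an SSH tunnel.)

- After developing locally, build the image with Maven and push it to the private Docker registry. (Through an SSH tunnel.)

- Use the Lens client to manage the Kubernetes cluster remotely and deploy applications. The VM must expose a Kubernetes management port; otherwise the cluster can only be managed from the command line after SSHing into the VM. (Through an SSH tunnel.)

- Traefik is the routing service (ingress controller) for Kubernetes. It can map an application to a sub-path such as /mavendemo.

- Once a hardware load balancer forwards external requests to Traefik's port, applications are reachable by domain name and sub-path, for example http://node1.test.com/mavendemo

- It is recommended to let the hardware load balancer terminate HTTPS. Traefik can also bind a separate HTTPS private key to each application.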

Component versions

- Kubernetes: 1.26.1

- container runtime: containerd 1.6.15

- cgroup driver: systemd

- OCI runtime: runc 1.1.4

- CRI CLI: crictl 1.26.0

- CNI: calico 3.25.0

- ingress controller: Traefik 2.9.6

- image registry: registry 2

- provision tool: helm 3

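Installing Kubernetes

References:

Step-by-step guide in Chinese, including how to configure mirror servers inside China: http://www.cdyszyxy.cn/cwtj/616625.html

Official documentation: https://kubernetes.io/zh-cn/docs/setup/production-environment/container-runtimes/

Installation steps for containerd, runc and the CNI plugins: https://github.com/containerd/containerd/blob/main/docs/getting-started.md

crictl installation guide: https://github.com/kubernetes-sigs/cri-tools/blob/master/docs/crictl.md

Run the following commands on the VM

Forward IPv4 and let iptables see bridged traffic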

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Set the required sysctl parameters; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system

Run the following commands on the server, in order

mkdir ~/setup

cd ~/setup

wget https://github.com/containerd/containerd/releases/download/v1.6.15/containerd-1.6.15-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-1.6.15-linux-amd64.tar.gz

sudo mkdir -p /usr/local/lib/systemd/system/

sudo curl -o /usr/local/lib/systemd/system/containerd.service https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo systemctl daemon-reload

sudo systemctl enable --now containerd

containerd --version

curl -OL https://github.com/opencontainers/runc/releases/download/v1.1.4/runc.amd64

sudo install -m 755 runc.amd64 /usr/local/sbin/runc

ctr container list

sudo usermod -a -G root alvin

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.26.0/crictl-v1.26.0-linux-amd64.tar.gz

sudo tar zxvf crictl-v1.26.0-linux-amd64.tar.gz -C /usr/local/bin

sudo crictl config runtime-endpoint unix:///run/containerd/containerd.sock

sudo crictl config image-endpoint unix:///run/containerd/containerd.sock

crictl ps

sudo containerd config default > config.toml

vi config.toml

# In config.toml, set the following two values:

SystemdCgroup = true

sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2"
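The two config.toml edits can also be applied without opening an editor. The following is a minimal sketch, assuming the generated file contains a `SystemdCgroup = false` line and a `sandbox_image = ...` line, as the default containerd 1.6 configuration does. `patch_containerd_config` is a hypothetical helper name, not part of containerd.

```shell
#!/bin/sh
# Hypothetical helper: patch a containerd config file in place.
# $1: path to config.toml, $2: pause image to use as sandbox_image.
patch_containerd_config() {
  # Switch runc to the systemd cgroup driver and replace the sandbox image.
  # A copy of the original file is kept next to it with a .bak suffix.
  sed -E -i.bak \
    -e 's/^([[:space:]]*SystemdCgroup = )false/\1true/' \
    -e "s|^([[:space:]]*sandbox_image = ).*|\\1\"$2\"|" \
    "$1"
}

# Example (run in ~/setup before copying the file to /etc/containerd):
# patch_containerd_config config.toml registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
```

Check the result with `grep -E 'SystemdCgroup|sandbox_image' config.toml` before restarting containerd.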

sudo mkdir -p /etc/containerd

sudo cp config.toml /etc/containerd/

wget https://github.com/containernetworking/plugins/releases/download/v1.2.0/cni-plugins-linux-amd64-v1.2.0.tgz

sudo mkdir -p /opt/cni/bin

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.2.0.tgz

sudo systemctl restart containerd

ctr container list

sudo swapoff -a

sudo vi /etc/fstab

# Comment out the following line

#/swap.img none swap sw 0 0

sudo reboot
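The manual /etc/fstab edit above can be scripted. This is a sketch only, assuming swap entries are ordinary fstab lines whose type field is `swap`; `comment_out_swap` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# $1: path to the file (normally /etc/fstab); a .bak copy of the original is kept.
comment_out_swap() {
  # Match lines that do not start with "#" and contain "swap" as a separate field.
  sed -E -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Example (needs root): comment_out_swap /etc/fstab
```

Lines that are already commented out, and entries for other filesystems, are left unchanged.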

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

echo "deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | sudo tee -a /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

sudo vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

Environment="KUBELET_CONFIG_ARGS=--container-runtime-endpoint=unix:///var/run/containerd/containerd.sock --cgroup-driver=systemd --config=/var/lib/kubelet/config.yaml"

Run the following commands only on the primary (control plane) VM

sudo kubeadm init --apiserver-bind-port=6443 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get node

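kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Run the following command on the other VMs to join them to the cluster

sudo kubeadm join 192.168.1.160:6443 --token vwt9aw.4y0rln8e4kmjibym --discovery-token-ca-cert-hash sha256:4abf678a78a325cf94f94a3d38ba67edf75cc61e0b58ad3cc0b85f9b438af336

Enabling crictl for a non-root user

sudo usermod -a -G root alvin

Using kubectl locally

Copy the contents of .kube/config on the server into the same directory on your local machine. Once kubectl is installed locally, you can access the cluster.

Using kubectl locally through an SSH tunnel

Edit ~/.kube/config so that the cluster entry points at the local end of the tunnel. Because insecure-skip-tls-verify is set, also remove the certificate-authority-data line from this entry; kubectl rejects an entry that specifies both.

- cluster:
    server: https://localhost:6443
    insecure-skip-tls-verify: true
  name: kubernetes

Open the tunnel

ssh user@server_ip -p ssh_port -i id_rsa -L 6443:localhost:6443

Managing the Kubernetes cluster graphically with Lens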

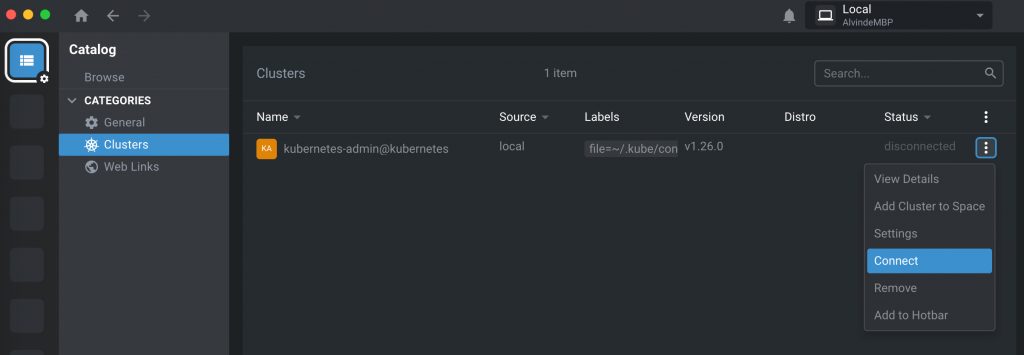

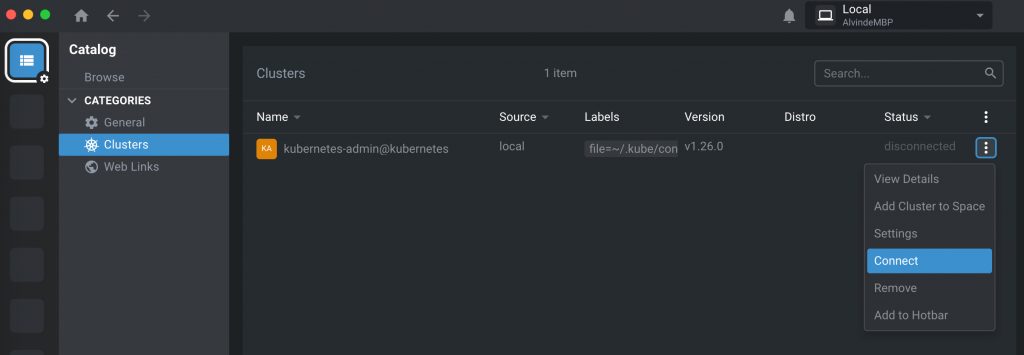

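Download Lens from https://k8slens.dev/desktop.html and register as a free user.

On the home page, go to Catalog -> Cluster, click the plus sign, and open the .kube/config file to add the cluster.

In Cluster, click the three dots on the right and choose Connect to start using it.

Installing Helm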

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

Installing Traefik

kubectl apply -f https://raw.githubusercontent.com/traefik/traefik/v2.9/docs/content/reference/dynamic-configuration/kubernetes-crd-definition-v1.yml

kubectl apply -f https://raw.githubusercontent.com/traefik/traefik/v2.9/docs/content/reference/dynamic-configuration/kubernetes-crd-rbac.yml

helm repo add traefik https://traefik.github.io/charts

helm repo update

helm install --namespace=kube-system traefik traefik/traefik

Installing Traefik automatically creates a Service. The NodePort mapped to port 80 is the port to expose externally.

kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

traefik LoadBalancer 10.200.64.134 <pending> 80:31807/TCP,443:30138/TCP 100m

After the matching IngressRoute is configured, the dashboard becomes reachable.

kubectl create -f traefik_ingress.yml

Contents of traefik_ingress.yml:

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: traefik-dashboard
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`node1.test.com`) && (PathPrefix(`/dashboard`) || PathPrefix(`/api`))
      kind: Rule
      services:
        - name: api@internal
          kind: TraefikService

Accessing Traefik routes through an SSH tunnel

Bind the virtual host name configured in Traefik to the local hosts file

vi /etc/hosts

127.0.0.1 node1.test.com

Map Traefik's HTTP port (the NodePort) to local port 80

sudo ssh -L 80:localhost:31807 user@server_ip -p 13006 -i id_rsa

Then open http://node1.test.com/dashboard/
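Every tunnel in this guide follows the same `ssh -L local:localhost:remote` pattern, so the command can be generated from a small helper. This is only a sketch: `tunnel_cmd` is a hypothetical name, it prints the command rather than running it, and the added `-N` flag (forward ports without opening a remote shell) is a suggestion that the original commands do not use.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) an ssh command that forwards a local
# port to a port on the server.
# $1: local port, $2: remote port, $3: user@server_ip, $4: SSH port, $5: key file
tunnel_cmd() {
  # -N keeps the session open for forwarding only, without a remote shell.
  printf 'ssh -N -L %s:localhost:%s %s -p %s -i %s\n' "$1" "$2" "$3" "$4" "$5"
}

tunnel_cmd 80 31807 user@server_ip 13006 id_rsa     # Traefik HTTP NodePort
tunnel_cmd 6443 6443 user@server_ip 13006 id_rsa    # Kubernetes API server
```

Binding local port 80 requires root, which is why the original command is run with sudo.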

Installing Docker Registry

Generate the Docker password file and place htpasswd in /opt/docker/auth on node2. (It should later be moved into a Secret.)

sudo mkdir -p /opt/docker/auth

sudo chmod 777 -R /opt/docker

cd /opt/docker/auth

sudo apt install -y apache2-utils

sudo htpasswd -Bbn docker_user docker_password > htpasswd

Create a namespace for infrastructure services

kubectl create ns infra

Deploy the Docker registry

kubectl create -f docker_registry.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: docker-registry
  name: docker-registry
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: docker-registry
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: docker-registry
    spec:
      containers:
        - env:
            - name: REGISTRY_AUTH
              value: htpasswd
            - name: REGISTRY_AUTH_HTPASSWD_PATH
              value: /auth/htpasswd
            - name: REGISTRY_AUTH_HTPASSWD_REALM
              value: Registry Realm
          image: registry:2
          imagePullPolicy: Always
          name: registry
          ports:
            - containerPort: 5000
              name: web
              protocol: TCP
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /auth
              name: auth
            - mountPath: /var/lib/registry
              name: data
            - name: config
              mountPath: "/etc/docker/registry"
              readOnly: true
      nodeName: by-test-plat2
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
        - hostPath:
            path: /opt/docker/auth
          name: auth
        - hostPath:
            path: /opt/docker/data
            type: DirectoryOrCreate
          name: data
        # The docker-registry-config ConfigMap must already exist in the infra namespace
        - name: config
          configMap:
            name: docker-registry-config
            items:
              - key: "config.yml"
                path: "config.yml"
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: docker-registry
  name: docker-registry
  # Same namespace as the Deployment, so that the selector can match its pods
  namespace: infra
spec:
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: web
      port: 5000
      protocol: TCP
      targetPort: 5000
  selector:
    app: docker-registry
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

Getting the Docker registry address

The Cluster IP is what Kubernetes uses in image references. The NodePort must be mapped out so that the local machine can push images through the public IP.

kubectl get svc -n infra

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

docker-registry NodePort 10.200.174.243 <none> 5000:32299/TCP 67m

Testing the Docker registry

Open http://vm_public_ip:32299/v2/_catalog in a local browser

Enter the user name and password to log in.
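The same check can be made from a terminal with curl. This is a minimal sketch that assumes curl is installed; `catalog_url` and `check_registry` are hypothetical helper names, and the credentials are the ones generated with htpasswd earlier.

```shell
#!/bin/sh
# Hypothetical helpers for checking the registry from the command line.

# $1: host or IP, $2: port (the NodePort, or the local end of an SSH tunnel)
catalog_url() {
  printf 'http://%s:%s/v2/_catalog\n' "$1" "$2"
}

# $1: host, $2: port, $3: user name, $4: password
check_registry() {
  # -f makes curl exit non-zero on HTTP errors such as 401 Unauthorized;
  # -u sends the credentials as HTTP basic authentication.
  curl -fsS -u "$3:$4" "$(catalog_url "$1" "$2")"
}

# Example: check_registry vm_public_ip 32299 docker_user docker_password
```

A successful call prints a JSON document listing the repositories; wrong credentials make curl fail.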

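Mapping the Docker registry port locally

ssh user@server_ip -p ssh_port -i id_rsa -L 5000:localhost:32299

Accessing the insecure registry from Docker Desktop on the development machine

node1.test.com resolves to the local IP configured in the hosts file, and port 5000 is the local end of the SSH tunnel to the registry's NodePort.

Whichever VM the Docker registry pod runs on, the NodePort is exposed on every VM.

Configuring the insecure registry for Rancher Desktop on the development machine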

Run on the development machine

LIMA_HOME="$HOME/Library/Application Support/rancher-desktop/lima" "/Applications/Rancher Desktop.app/Contents/Resources/resources/darwin/lima/bin/limactl" shell 0

sudo vi /etc/conf.d/docker

Add the following setting

DOCKER_OPTS="--insecure-registry=node1.test.com:5000"

Pushing a Docker image from the development machine to the server's Docker registry

docker tag tomcat:latest node1.test.com:5000/tomcat:latest

docker login node1.test.com:5000

docker push node1.test.com:5000/tomcat:latest

Check on the server that the image has been uploaded

crictl image ls

Building with Maven and pushing the Docker image to the server's Docker registry from the development machine

Project code: https://github.com/kjstart/mavendemo

The Docker registry user name and password must be configured in the local Maven settings. The server id used here must match the one in the project's pom.xml.

vi ~/.m2/settings.xml

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
  <servers>
    <server>
      <id>docker-vm</id>
      <username>alvin</username>
      <password>alvin</password>
    </server>
  </servers>
</settings>

Once this is configured, build the project

mvn clean package docker:build docker:push -DskipTests

Note: Docker image names must not use camel case (ExampleName); otherwise the push fails with a Broken Pipe error.
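Docker repository names must be lowercase, so a camel-case project name has to be converted before it is used as an image name. The sketch below shows one way to do that; `to_image_name` is a hypothetical helper, and the hyphen-separated convention is an assumption rather than something the demo project prescribes.

```shell
#!/bin/sh
# Hypothetical helper: turn a camelCase or PascalCase project name into a
# lowercase, hyphen-separated name that is valid as a Docker repository name.
to_image_name() {
  # Insert "-" before each uppercase letter that follows a lowercase letter
  # or digit, then lowercase the whole string.
  printf '%s\n' "$1" | sed -E 's/([a-z0-9])([A-Z])/\1-\2/g' | tr '[:upper:]' '[:lower:]'
}

to_image_name ExampleName   # prints: example-name
```

Names that are already lowercase pass through unchanged.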

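Configuring insecure HTTP and authentication for containerd on the servers

This step must be done on every VM (node). 10.200.172.167 is the ClusterIP of the Docker registry Service in this example; replace it with the CLUSTER-IP that kubectl get svc reports for your registry.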

sudo vi /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d"

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.configs."10.200.172.167:5000".auth]

username = "docker_user"

password = "docker_password"

sudo mkdir -p /etc/containerd/certs.d/10.200.172.167:5000

sudo vi /etc/containerd/certs.d/10.200.172.167:5000/hosts.toml

server = "http://10.200.172.167:5000"

[host."http://10.200.172.167:5000"]

capabilities = ["pull", "resolve", "push"]

skip_verify = true

sudo systemctl restart containerd
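Because the hosts.toml file has to be created on every node, generating it from a script avoids typing the registry address several times. This is a sketch under the same assumptions as the manual steps (a plain-HTTP registry and the default /etc/containerd/certs.d directory); `write_hosts_toml` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: write a containerd hosts.toml for a plain-HTTP registry.
# $1: registry address as host:port
# $2: certs.d base directory (optional, defaults to /etc/containerd/certs.d)
write_hosts_toml() {
  dir="${2:-/etc/containerd/certs.d}/$1"
  mkdir -p "$dir"
  cat > "$dir/hosts.toml" <<EOF
server = "http://$1"

[host."http://$1"]
  capabilities = ["pull", "resolve", "push"]
  skip_verify = true
EOF
}

# Example (needs root): write_hosts_toml 10.200.172.167:5000
```

Restart containerd afterwards so that the new registry configuration is picked up.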

创建应用程序的命名空间

kubectl create ns app创建应用程序配置

apiVersion: v1

kind: ConfigMap

metadata:

name: apps-common-config

namespace: app

data:

# property-like keys; each key maps to a simple value

microservice-integration-server-url: "http://localhost:5675"

minio-url: "https://minio.com"

custom-api-url: "https://custom-api.com:80"

custom-api-alg-url: "http://localhost:18081"

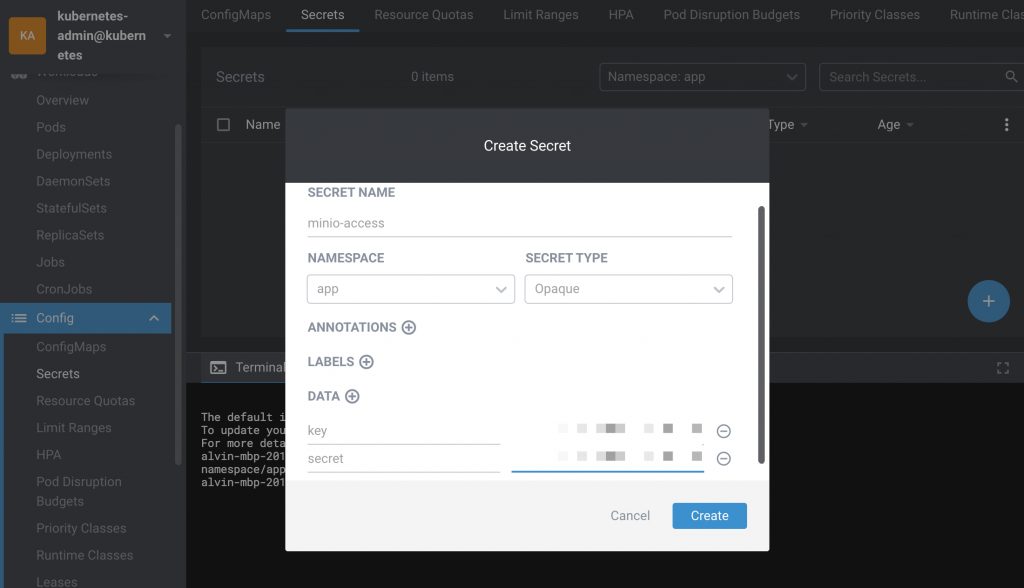

algorithm-url: "http://localhost:18081"创建应用程序秘钥

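apiVersion: v1
data:
  key: xxxx
  secret: xxxx
kind: Secret
metadata:
  name: minio-access
  namespace: app
type: Opaque
---
apiVersion: v1
data:
  ip: xxx
  password: xxxx
  username: xxxxx
kind: Secret
metadata:
  name: database
  namespace: app
type: Opaque

In a YAML manifest, the values under data must be base64-encoded. Secrets can also be created with Lens, where the data values are entered as plain text and need no base64 encoding.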
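When a Secret is written by hand as YAML, each value under `data` has to be base64-encoded first. The helper below is a small sketch of that step, assuming the standard `base64` utility is available; `encode_secret_value` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: base64-encode a value for the data: section of a Secret.
encode_secret_value() {
  # printf avoids the trailing newline that echo would append to the value;
  # tr removes the line wrapping that some base64 implementations add.
  printf '%s' "$1" | base64 | tr -d '\n'
  printf '\n'
}

encode_secret_value admin   # prints: YWRtaW4=
```

Alternatively, `kubectl create secret generic NAME --from-literal=key=value` performs the encoding itself, and the `stringData` field of a Secret accepts plain-text values.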

Deploying a project

This is the demo project: https://github.com/kjstart/mavendemo

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: maven-demo
  name: maven-demo
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  selector:
    matchLabels:
      app: maven-demo
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: maven-demo
    spec:
      containers:
        # Registry ClusterIP:port; use the same address that containerd is configured for
        - image: 10.200.174.243:5000/maven-demo:latest
          imagePullPolicy: Always
          name: maven-demo
          ports:
            - containerPort: 8080
              name: web
              protocol: TCP
          resources:
            requests:
              memory: "64Mi"
              cpu: "250m"
            limits:
              memory: "128Mi"
              cpu: "500m"
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
---
apiVersion: v1
kind: Service
metadata:
  namespace: default
  name: maven-demo
  labels:
    app: maven-demo
spec:
  ports:
    - port: 9090
      name: web
      # Forward to the container port named "web" (8080); without targetPort
      # the Service would forward to port 9090 on the pod
      targetPort: web
  selector:
    app: maven-demo
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: maven-demo
  namespace: default
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`node1.test.com`) && PathPrefix(`/mavendemo`)
      kind: Rule
      services:
        - name: maven-demo
          port: 9090

Accessing services that run on a VM

Services that are not migrated to k8s can be reached in the following way.

For example, to reach 192.168.113.141:13306

kubectl create -f mysql-vm.yml

apiVersion: v1
kind: Endpoints
metadata:
  name: mysql-vm # must match metadata.name of the Service
  namespace: infra # in a fixed namespace
subsets:
  - addresses:
      - ip: 192.168.113.141 # IP of the host VM
    ports:
      - port: 13306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-vm # must match metadata.name of the Endpoints
  namespace: infra # in a fixed namespace
spec:
  ports:
    - port: 13306

k get svc -n infra

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

docker-registry NodePort 10.200.172.167 <none> 5000:32299/TCP 42h

mysql-vm ClusterIP 10.200.135.163 <none> 13306/TCP 14s

Pods in the cluster can then reach the service through the Service's CLUSTER-IP. If the service is migrated later, the Service IP stays the same.

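Accessing applications from outside

Later, the public domain name must be mapped to Traefik's port on the VM, and the host name in the IngressRoute changed to match.

The application's port is exposed on the container and on the Service, and the IngressRoute maps it to a path, here /mavendemo

http://node1.test.com:31807/mavendemo/hello

Accessing applications from inside the cluster

Use the Service name and the port the application exposes. The port must be exposed at both the container and the Service level.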
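Inside the cluster, a Service is reachable under a DNS name built from its name and namespace. The sketch below assembles that URL. It assumes the default cluster domain `cluster.local`, which kubeadm uses unless configured otherwise, and `service_url` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: build the in-cluster URL of a Service.
# $1: Service name, $2: namespace, $3: Service port
# $4: cluster domain (optional, defaults to cluster.local)
service_url() {
  printf 'http://%s.%s.svc.%s:%s\n' "$1" "$2" "${4:-cluster.local}" "$3"
}

service_url maven-demo default 9090   # prints: http://maven-demo.default.svc.cluster.local:9090
```

Pods in the same namespace can also use the short form, for example http://maven-demo:9090.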

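Adding a new node (VM) to the cluster

Generate a token on the primary node. It can be reused for 24 hours.

kubeadm token create

After installing Kubernetes on the new node (see the Installing Kubernetes section above), run the following command

kubeadm join 192.168.113.141:6443 --token <your-token> --discovery-token-ca-cert-hash sha256:f96f75de5775c1a66caa6ad5cb8783f4251db5c3137ac42c511f794b95afac33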
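Running `kubeadm token create --print-join-command` on the primary node prints a complete join command, which avoids copying the token and hash by hand. If the command is assembled manually instead, a small check of the token format catches copy-and-paste mistakes such as a placeholder left in place. This is a sketch only; `is_valid_token` and `join_cmd` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helpers for assembling a kubeadm join command by hand.

# kubeadm bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
is_valid_token() {
  printf '%s' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# $1: API server endpoint (ip:port), $2: token, $3: CA cert hash (sha256:...)
join_cmd() {
  if ! is_valid_token "$2"; then
    echo "invalid token format: $2" >&2
    return 1
  fi
  # Note the plain double hyphens; typographic dashes are rejected by kubeadm.
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' "$1" "$2" "$3"
}

# Example: join_cmd 192.168.113.141:6443 <your-token> sha256:<your-hash>
```

The printed command is then run with sudo on the new node.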

Allowing the K8s master node to run applications

By default, the master node (control plane) does not run application pods.

If it should, edit the master node and remove its NoSchedule taint.
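Instead of editing the node object, the taint can be removed with `kubectl taint`. The sketch below prints the command for a given node. It assumes the taint key `node-role.kubernetes.io/control-plane`, which kubeadm applies in Kubernetes 1.26, and `untaint_cmd` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the command that removes the control plane
# NoSchedule taint from one node. The trailing "-" means "remove this taint".
# $1: node name as shown by "kubectl get node"
untaint_cmd() {
  printf 'kubectl taint nodes %s node-role.kubernetes.io/control-plane:NoSchedule-\n' "$1"
}

untaint_cmd node1
```

Check the taints actually present with `kubectl describe node <name>` before running the printed command.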

Common kubectl aliases

Put these in .bash_profile to simplify everyday operations


alias k=kubectl

alias kc='kubectl config use-context'

alias kdd='kubectl delete pod'

alias kl='kubectl logs -f'

alias kg='kubectl get pod'

alias kgg='kubectl get pod | grep'

alias kga='kubectl get pods --all-namespaces'

alias kd='kubectl describe pod'

alias ke='func() { k exec -it $1 -- sh;}; func'

alias kubectl='_kubectl_custom(){ if [[ "$1" == "ns" && "$2" != "" ]]; then kubectl config set-context --current --namespace=$2; elif [[ "$1" == "ns" && "$2" == "" ]]; then kubectl get ns; elif [[ "$1" == "ns" && "$2" == "" ]]; then kubectl config get-contexts | sed -n "2p" | awk "{print \$5}"; else kubectl $*; fi;}; _kubectl_custom'

References

http://www.cdyszyxy.cn/cwtj/616625.html

https://kubernetes.io/zh-cn/docs/setup/production-environment/container-runtimes/

https://github.com/containerd/containerd/blob/main/docs/getting-started.md

https://github.com/kubernetes-sigs/cri-tools/blob/master/docs/crictl.md

https://helm.sh/docs/intro/install/

https://doc.traefik.io/traefik/getting-started/install-traefik/

https://blog.csdn.net/avatar_2009/article/details/109807878

https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration

https://github.com/containerd/containerd/blob/main/docs/hosts.md

https://blog.csdn.net/weixin_44742630/article/details/126095739

https://www.cnblogs.com/yangmeichong/p/16661444.html

https://blog.csdn.net/weixin_43616190/article/details/126415601

https://kubernetes.io/docs/concepts/configuration/configmap/

https://kubernetes.io/zh-cn/docs/concepts/configuration/secret/

https://blog.csdn.net/Thomson_tian/article/details/117218281
